Cloud convection

This page describes the cloud convection (aka wet convection) algorithms of GEOS-Chem. We also invite you to read our Wet deposition wiki page, which contains information about the algorithms used for scavenging of soluble tracers.

Overview

From Section 2, paragraph 10 of Wu et al [2007]:

A major difference between the GEOS-3, GEOS-4, and GISS models is the treatment of wet convection. GEOS-3 [and now also GEOS-5, ed.] uses the Relaxed Arakawa-Schubert convection scheme [Moorthi and Suarez, 1992]. GEOS-4 has separate treatments of deep and shallow convection following the schemes developed by Zhang and McFarlane [1995] and Hack [1994]. The convection scheme in the GISS GCM was described by Del Genio and Yao [1993]. Unlike the GEOS models, the GISS GCM allows for condensed water in the atmosphere (i.e., condensed water is not immediately precipitated), resulting in frequent nonprecipitating shallow convection. In the wet deposition scheme, we do not scavenge soluble species from shallow convective updrafts at altitudes lower than 700 hPa in the GISS-driven model, whereas we do in the GEOS-driven model [Liu et al., 2001].

Updraft scavenging of soluble tracers (as applied to both the Relaxed Arakawa-Schubert and Hack/Zhang-McFarlane schemes in GEOS-Chem) is described in Section 1 of Jacob et al [2000] and in Section 2.3.1 of Liu et al [2001].

--Bob Y. 13:13, 19 February 2010 (EST)

Relaxed Arakawa-Schubert scheme

This is the convection scheme that GEOS-Chem uses with both GEOS-3 and GEOS-5 meteorology. The source code for this scheme is located in routine NFCLDMX in convection_mod.f.

Hack and Zhang-McFarlane schemes

These are the convection schemes that GEOS-Chem uses with both GEOS-4 and GCAP meteorology.

GEOS-4

The source code for the convection scheme required for GEOS-4 meteorology is contained within the F90 module fvdas_convect_mod.f. The main driver is called FVDAS_CONVECT. This routine calls the following routines:

- ARCONVTRAN: Prepares arrays for use by CONVTRAN

- CONVTRAN: Main driver for deep convection (i.e. Zhang/McFarlane scheme)

- HACK_CONV: Main driver for shallow convection (i.e. Hack scheme)

GISS

The source code for the convection scheme required for GISS/GCAP meteorology is contained within the F90 module gcap_convect_mod.f. The main driver is called GCAP_CONVECT. This routine calls the following routines:

- ARCONVTRAN: Prepares arrays for use by CONVTRAN

- CONVTRAN: Main driver for deep convection (i.e. Zhang/McFarlane scheme)

There is no equivalent routine to HACK_CONV for the GCAP meteorology. Instead, ARCONVTRAN and CONVTRAN are called with entraining mass fluxes, and then again with non-entraining mass fluxes.

--Bob Y. 13:11, 19 February 2010 (EST)

Validation

See Bey et al [2001], Liu et al [2001], and Wu et al [2007].

References

- Allen, D.J., R.B. Rood, A.M. Thompson, and R.D. Hudson, Three dimensional 222Rn calculations using assimilated data and a convective mixing algorithm, J. Geophys. Res., 101, 6871-6881, 1996a.

- Allen, D.J., et al., Transport induced interannual variability of carbon monoxide using a chemistry and transport model, J. Geophys. Res., 101, 28,655-28,670, 1996b.

- Bey, I., D. J. Jacob, R. M. Yantosca, J. A. Logan, B. Field, A. M. Fiore, Q. Li, H. Liu, L. J. Mickley, and M. Schultz, Global modeling of tropospheric chemistry with assimilated meteorology: Model description and evaluation, J. Geophys. Res., 106, 23,073-23,096, 2001.

- Del Genio, A. D., and M. Yao, Efficient cumulus parameterization for long-term climate studies: The GISS scheme, in The Representation of Cumulus Convection in Numerical Models, Meteorol. Monogr., 46, 181–184, 1993.

- Hack, J.J., Parameterization of moist convection in the National Center for Atmospheric Research Community Climate Model (CCM2), J. Geophys. Res., 99, 5551–5568, 1994.

- Jacob, D.J., H. Liu, C. Mari, and R.M. Yantosca, Harvard wet deposition scheme for GMI, Harvard University Atmospheric Chemistry Modeling Group, revised March 2000.

- Liu, H., et al. (2001), Constraints from 210Pb and 7Be on wet deposition and transport in a global three-dimensional chemical tracer model driven by assimilated meteorological fields, J. Geophys. Res., 106, 12,109–12,128.

- Moorthi, S., and M. J. Suarez, Relaxed Arakawa-Schubert: A parameterization of moist convection for general circulation models, Mon. Weather Rev., 120, 978–1002, 1992.

- Wu, S., L.J. Mickley, D.J. Jacob, J.A. Logan, R.M. Yantosca, and D. Rind, Why are there large differences between models in global budgets of tropospheric ozone?, J. Geophys. Res., 112, D05302, doi:10.1029/2006JD007801, 2007.

- Zhang, G. J., and N. A. McFarlane, Sensitivity of climate simulations to the parameterization of cumulus convection in the Canadian Climate Centre general circulation model, Atmos. Ocean, 33(3), 407–446, 1995.

--Bob Y. 13:11, 19 February 2010 (EST)

Previous issues that are now resolved

Computational bottleneck in the v11-01 convection module

This update was included in v11-02a and approved on 12 May 2017.

After the v11-01 public release was issued, the GCST discovered a computational bottleneck in the convection module. The following DO loop at line 343 of GeosCore/convection_mod.F was not parallelized:

! Loop over advected species

DO NA = 1, nAdvect

! Species ID

N = State_Chm%Map_Advect(NA)

! Now point to a 3D slice of the FSOL array

p_FSOL => FSOL(:,:,:,NA)

! Fraction of soluble species

CALL COMPUTE_F( am_I_Root, N, p_FSOL, ISOL(NA),

& Input_Opt, State_Met, State_Chm, RC )

! Free pointer memory

p_FSOL => NULL()

ENDDO

The loop was probably left unparallelized due to an former issue that has probably since been resolved.

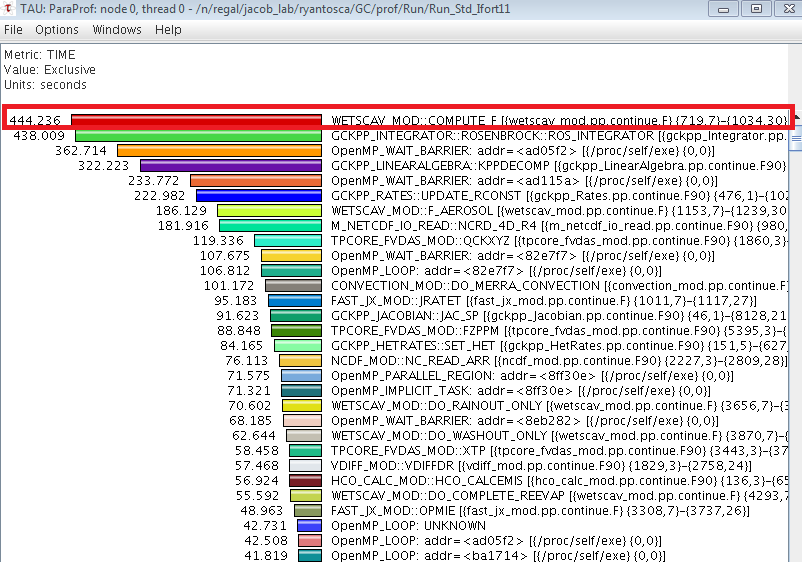

Bob Yantosca profiled the GEOS-Chem v11-01-public code with the TAU performance analyzer, using a 2-model-day simulation with 8 CPUs. The results are shown below:

As you can see, because the COMPUTE_F routine was not being called from a parallel loop, the subroutine was only being executed on the master CPU. This caused a slowdown because the other CPUs were being forced to wait until COMPUTE_F finished before the simulation could proceed.

The solution was to parallelize the DO loop in order to obtain better load balancing. We added the OpenMP directives around the loop:

! Loop over advected species

!$OMP PARALLEL DO

!$OMP+DEFAULT( SHARED )

!$OMP+PRIVATE( NA, N, p_FSOL, RC )

DO NA = 1, nAdvect

... etc ...

ENDDO

!$OMP END PARALLEL DO

Parallelizing the loop indeed removed the bottleneck:

(Figure: V11-01-thread0-parallelized.png)

After parallelizing the loop, the COMPUTE_F routine now only spends 14 seconds on the master CPU, instead of ~400 seconds. This means that the work of calling COMPUTE_F is now being efficiently split up among all 8 CPUs. This should result in a significant speedup.
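The same pattern can be tried in a standalone free-form Fortran program. This is only a sketch: `fill_fraction` is a hypothetical stand-in for COMPUTE_F, the array dimensions are arbitrary, and the directive uses free-form syntax rather than the fixed-form `!$OMP+` continuation lines in convection_mod.F. If the program is compiled without OpenMP support, the directives are treated as comments and the loop simply runs serially.

```fortran
program parallel_species_loop
  implicit none
  integer, parameter :: fp = kind(1.0d0)
  integer, parameter :: nx = 4, ny = 3, nz = 2   ! arbitrary grid size
  integer, parameter :: n_advect = 8             ! arbitrary species count
  real(fp), target   :: fsol(nx, ny, nz, n_advect)
  real(fp), pointer  :: p_fsol(:,:,:)
  integer            :: na

  fsol = 0.0_fp

  ! Each iteration writes only to its own species slice, so the
  ! iterations are independent. The loop index and the pointer are
  ! PRIVATE so that each thread works with its own copy.
  !$omp parallel do default(shared) private(na, p_fsol)
  do na = 1, n_advect
     p_fsol => fsol(:,:,:,na)          ! point to a 3D slice
     call fill_fraction(na, p_fsol)    ! stand-in for COMPUTE_F
     p_fsol => null()                  ! disassociate the pointer
  end do
  !$omp end parallel do

  print '(a,f8.4)', 'sum of fsol = ', sum(fsol)

contains

  ! Hypothetical placeholder for the per-species work
  subroutine fill_fraction(n, f)
    integer,  intent(in)  :: n
    real(fp), intent(out) :: f(:,:,:)
    f = real(n, fp) / real(n_advect, fp)
  end subroutine fill_fraction

end program parallel_species_loop
```

Because every iteration touches a different slice of the shared array, no synchronization is needed inside the loop; only the scalars and pointers that are reassigned in each iteration have to be private.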

The GCST will add this fix as a patch to the v11-01 public release, as well as to the v11-02a development stream. We have performed some timing tests that show the impact of this speedup (as well as the speedup caused by the improved P/L diagnostic in KPP that will also go into v11-02a).

| Run name, timesteps, and submitter | Machine or node and compiler | CPU vendor | CPU model | Speed [MHz] | # of CPUs | CPU time | Wall time | CPU / Wall ratio | % of ideal |
|---|---|---|---|---|---|---|---|---|---|
| v11-01-public (C20T10), Bob Yantosca | regal16.rc.fas.harvard.edu, ifort 11.1 | GenuineIntel | Intel(R) Xeon(R) CPU E5-2660 0 @ 2.20GHz | 2199.915 | 8 | 62554.07 s (17:22:34) | 9355.80 s (02:35:59) | 6.6861 | 83.58 |
| v11-02a-P/L (C20T10), Melissa Sulprizio | regal17.rc.fas.harvard.edu, ifort 11.1 | GenuineIntel | Intel(R) Xeon(R) CPU E5-2660 0 @ 2.20GHz | 2199.993 | 8 | 55853.62 s (15:30:54) | 8373.14 s (02:19:44), 1.12X faster than v11-01-public | 6.6706 | 83.38 |
| v11-02a-P/L+COMPUTE_F (C20T10), Melissa Sulprizio | regal17.rc.fas.harvard.edu, ifort 11.1 | GenuineIntel | Intel(R) Xeon(R) CPU E5-2660 0 @ 2.20GHz | 2199.993 | 8 | 55774.58 s (15:29:35) | 7666.69 s (02:07:52), 1.22X faster than v11-01-public | 7.2749 | 90.94 |

--Bob Yantosca (talk) 19:26, 9 February 2017 (UTC)

Resolve very high tracer concentrations in MERRA and GEOS-FP convective scavenging

This update was validated with 1-month benchmark simulation v11-01d and 1-year benchmark simulation v11-01d-Run1. This version was approved on 12 Dec 2015.

Viral Shah wrote:

- I am running v10.01, nested-grid NA full-chemistry w/ SOA simulation with the GEOS-FP met fields, and have found instances of extremely high concentrations of certain tracers that develop all of a sudden. I traced this back to a bug (highlighted in RED) in the DO_MERRA_CONVECTION (in module GeosCore/convection_mod.F) routine at the following:

! Check if the tracer is an aerosol or not

IF ( AER == .TRUE. ) THEN

!---------------------------------------------------------

! Washout of aerosol tracers

! This is modeled as a kinetic process

!---------------------------------------------------------

! Define ALPHA, the fraction of raindrops that

! re-evaporate when falling from (I,J,L+1) to (I,J,L)

ALPHA = ( REEVAPCN(K) * AD(K) )

& / ( PDOWN(K+1) * AREA_M2 * 10e+0_fp )

! ALPHA2 is the fraction of the rained-out aerosols

! that gets resuspended in grid box (I,J,L)

ALPHA2 = 0.5e+0_fp * ALPHA

- Here, the value of ALPHA, which should be less than or equal to 1, is sometimes much higher than 1, and for the attached example was >10^5. Since ALPHA is used to calculate resuspension of precipitating mass from above, these high values of ALPHA lead to a gain in tracer mass at the lower levels in excess of what is coming from above. I suggest we replace the RED code with the following piece of code in GREEN which is used elsewhere in the DO_MERRA_CONVECTION routine.

! %%%% CASE 1 %%%%

! Partial re-evaporation. Less precip is leaving

! the grid box than entered from above.

IF ( PDOWN(K+1) > PDOWN(K) .AND.

& PDOWN(K) > TINYNUM ) THEN

! Define ALPHA, the fraction of raindrops that

! re-evaporate when falling from grid box

! (I,J,L+1) to (I,J,L)

ALPHA = ( REEVAPCN(K) * AD(K) )

& / ( PDOWN(K+1) * AREA_M2 * 10e+0_fp )

! For safety

ALPHA = MIN( ALPHA, 1e+0_fp )

! ALPHA2 is the fraction of the rained-out aerosols

! that gets resuspended in grid box (I,J,L)

ALPHA2 = 0.5e+0_fp * ALPHA

ENDIF

! %%%% CASE 2 %%%%

! Total re-evaporation. Precip entered from above,

! but no precip is leaving grid box (ALPHA = 2 so

! that ALPHA2 = 1)

IF ( PDOWN(K) < TINYNUM ) THEN

ALPHA2 = 1e+0_fp

ENDIF
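To see the effect of the cap, the two cases can be condensed into a small standalone function. This is a sketch rather than the code in DO_MERRA_CONVECTION: the function name `resuspended_fraction`, the scalar arguments, the value of `tinynum`, and the default of zero when neither case applies are all assumptions made for illustration, and the input values are arbitrary.

```fortran
module reevap_sketch
  implicit none
  integer,  parameter :: fp = kind(1.0d0)
  real(fp), parameter :: tinynum = 1.0e-14_fp   ! assumed threshold
contains
  ! Returns ALPHA2, the resuspended fraction of rained-out aerosol.
  pure function resuspended_fraction(reevap, ad, pdown_above, pdown_here, &
                                     area_m2) result(alpha2)
    real(fp), intent(in) :: reevap, ad, pdown_above, pdown_here, area_m2
    real(fp) :: alpha, alpha2

    alpha2 = 0.0_fp                      ! assumed default: no resuspension
    if (pdown_above > pdown_here .and. pdown_here > tinynum) then
       ! Case 1: partial re-evaporation, with ALPHA capped at 1
       alpha  = (reevap * ad) / (pdown_above * area_m2 * 10.0_fp)
       alpha  = min(alpha, 1.0_fp)
       alpha2 = 0.5_fp * alpha
    end if
    if (pdown_here < tinynum) then
       ! Case 2: total re-evaporation
       alpha2 = 1.0_fp
    end if
  end function resuspended_fraction
end module reevap_sketch

program demo_reevap
  use reevap_sketch
  implicit none
  ! Arbitrary inputs chosen so that the uncapped ALPHA would be 1.0e5
  print *, resuspended_fraction(1.0e6_fp, 1.0_fp, 1.0_fp, 0.5_fp, 1.0_fp)
  ! No precipitation leaves the box
  print *, resuspended_fraction(1.0e6_fp, 1.0_fp, 1.0_fp, 0.0_fp, 1.0_fp)
end program demo_reevap
```

With the cap in place the first call returns 0.5 rather than 5.0e4, so the mass resuspended in a grid box can no longer exceed the mass arriving from above.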

- A second bug I noticed is in the lines in RED right below the first one. The current code is as follows:

! GAINED is the rained out aerosol coming down from

! grid box (I,J,L+1) that will evaporate and re-enter

! the atmosphere in the gas phase in grid box (I,J,L).

GAINED = T0_SUM * ALPHA2

! Amount of aerosol lost to washout in grid box

WETLOSS = Q(K,IC) * BMASS(K) / TCVV *

& WASHFRAC - GAINED

! LOST is the rained out aerosol coming down from

! grid box (I,J,L+1) that will remain in the liquid

! phase in grid box (I,J,L) and will NOT re-evaporate.

LOST = T0_SUM - GAINED

! Update tracer concentration (V. Shah, mps, 5/20/15)

Q(K,IC) = Q(K,IC) - WETLOSS * TCVV / BMASS(K)

- In this case, the amount of tracer GAINED is (mistakenly) not added to Q before WETLOSS is calculated. The fix for the bold faced line should be as follows:

WETLOSS = ( Q(K,IC) * BMASS(K) / TCVV + GAINED ) *

& WASHFRAC - GAINED

- This will be consistent with the code (below) for the 'non-aerosol' case

! MASS_WASH is the total amount of non-aerosol tracer

! that is available for washout in grid box (I,J,L).

! It consists of the mass in the precipitating

! part of box (I,J,L), plus the previously rained-out

! tracer coming down from grid box (I,J,L+1).

! (Eq. 15, Jacob et al, 2000).

MASS_WASH = ( F_WASHOUT * Q(K,IC) ) * BMASS(K) /

& TCVV + T0_SUM

! WETLOSS is the amount of tracer mass in

! grid box (I,J,L) that is lost to washout.

! (Eq. 16, Jacob et al, 2000)

WETLOSS = MASS_WASH * WASHFRAC - T0_SUM
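A short numerical example makes the difference between the original and corrected aerosol WETLOSS expressions concrete. The values below are arbitrary, and `q_mass` stands in for Q(K,IC) * BMASS(K) / TCVV; only the two formulas themselves come from the code quoted above.

```fortran
program wetloss_compare
  implicit none
  integer, parameter :: fp = kind(1.0d0)
  real(fp), parameter :: q_mass   = 100.0_fp  ! aerosol mass already in the box
  real(fp), parameter :: t0_sum   = 40.0_fp   ! rained-out mass from above
  real(fp), parameter :: alpha2   = 0.5_fp    ! resuspended fraction
  real(fp), parameter :: washfrac = 0.25_fp   ! washout fraction
  real(fp) :: gained, wetloss_old, wetloss_new

  gained = t0_sum * alpha2

  ! Original expression: washout acts only on the mass already in the box
  wetloss_old = q_mass * washfrac - gained

  ! Corrected expression: the resuspended mass is added before washout
  wetloss_new = (q_mass + gained) * washfrac - gained

  print '(a,f8.3)', 'gained      = ', gained
  print '(a,f8.3)', 'wetloss_old = ', wetloss_old
  print '(a,f8.3)', 'wetloss_new = ', wetloss_new
  print '(a,f8.3)', 'difference  = ', wetloss_new - wetloss_old
end program wetloss_compare
```

The two results differ by GAINED * WASHFRAC, which is the portion of the resuspended aerosol that is itself washed out in the same grid box.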

--Melissa Sulprizio (talk) 16:43, 14 September 2015 (UTC)

Fixed bug in DO_MERRA_CONVECTION affecting mass conservation

These updates were validated in the 1-month benchmark simulation v11-01a, which was approved on 07 Jul 2015.

Mass conservation tests validating the change in tracer units within cloud convection revealed a discrepancy in mass conservation between using GEOS-5 and GEOS-FP. Comparison of subroutine DO_MERRA_CONVECTION, the convection routine used for GEOS-FP, and subroutine NFCLDMX, the convection routine used for GEOS-5, showed that a calculation in DO_MERRA_CONVECTION was incorrectly outside of the internal timestep loop.

In DO_MERRA_CONVECTION, the quantity QB, the average mixing ratio in the column below the cloud base, is calculated in two steps:

- the numerator of QB is calculated by summing the tracer concentrations weighted by pressure and assigned to variable QB_NUM

- QB is calculated by dividing QB_NUM by the pressure difference across all levels below the cloud base.

While tracer concentrations were updated every internal timestep, QB_NUM was calculated prior to the timestep loop and therefore was not updated with the adjusted concentrations. As a result, mass was only conserved during the first internal timestep.

To correct this problem, we made these modifications in subroutine DO_MERRA_CONVECTION, located in module GeosCore/convection_mod.F:

- Change the declaration of QB_NUM from an array to a scalar.

- Move the initialization and calculation of QB_NUM to within the timestep loop, just prior to the calculation of QB, as follows:

! Calculate QB_NUM, the numerator for QB. QB is the

! weighted average mixing ratio below the cloud base.

! QB_NUM is equal to the grid box tracer concentrations

! [kg/kg total air] weighted by the adjacent level pressure

! differences and summed over all levels up to just

! below the cloud base (ewl, 6/22/15)

QB_NUM = 0e+0_fp

DO K = 1, CLDBASE-1

QB_NUM = QB_NUM + Q(K,IC) * DELP(K)

ENDDO

! Compute QB, the weighted avg mixing ratio below

! the cloud base [kg/kg total air]

QB = QB_NUM / ( PEDGE(1) - PEDGE(CLDBASE) )

With these updates, the mass variance for GEOS-FP within convection is the same order of magnitude as for GEOS-5. For the case of CO2 with emissions turned off and initial values of 370 ppm globally, the mass fluctuation is a few hundred kg globally over a few days.
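The effect of recomputing QB_NUM inside the timestep loop can be illustrated with a single column. The sketch below is not taken from convection_mod.F: the level count, pressure edges, concentrations, and the ad hoc update that stands in for the convective adjustment are all invented for illustration.

```fortran
program qb_refresh
  implicit none
  integer, parameter :: fp = kind(1.0d0)
  integer, parameter :: nlev = 5, cldbase = 4, n_steps = 2
  ! Pressure edges [hPa]; level K lies between edges K and K+1
  real(fp), parameter :: pedge(nlev+1) = &
       [ 1000.0_fp, 950.0_fp, 900.0_fp, 850.0_fp, 800.0_fp, 750.0_fp ]
  real(fp) :: delp(nlev), q(nlev)
  real(fp) :: qb_num, qb, qb_stale
  integer  :: k, istep

  delp = pedge(1:nlev) - pedge(2:nlev+1)
  q    = [ 4.0_fp, 3.0_fp, 2.0_fp, 1.0_fp, 1.0_fp ]

  ! Value computed once before the timestep loop (the old behavior)
  qb_stale = sum(q(1:cldbase-1) * delp(1:cldbase-1)) &
           / (pedge(1) - pedge(cldbase))

  do istep = 1, n_steps
     ! Recompute the numerator from the current concentrations
     qb_num = 0.0_fp
     do k = 1, cldbase - 1
        qb_num = qb_num + q(k) * delp(k)
     end do
     qb = qb_num / (pedge(1) - pedge(cldbase))
     print '(a,i2,2(a,f7.4))', 'step', istep, '  qb = ', qb, &
          '  stale qb = ', qb_stale

     ! Stand-in for the convective update: halve the sub-cloud values
     q(1:cldbase-1) = 0.5_fp * q(1:cldbase-1)
  end do
end program qb_refresh
```

On the first step the two values agree, but on the second step the refreshed QB follows the updated concentrations while the value computed before the loop does not, which is the inconsistency described above.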

--Lizzie Lundgren (talk) 21:50, 24 June 2015 (UTC)

--Bob Y. (talk) 17:55, 7 July 2015 (UTC)

Reduce time spent in routine DO_CONVECTION when using GEOS-FP or MERRA

These updates were validated in the 1-month benchmark simulation v10-01c and approved on 29 May 2014.

Using the Tuning and Analysis Utilities (TAU), we identified modifications that speed up the GEOS-FP/MERRA convection module. We made modifications in the following routines:

- DO_CONVECTION (in module GeosCore/convection_mod.F):

  - We no longer use objects from GeosCore/gc_type_mod.F (i.e. OPTIONS, DIMINFO, etc.). These have been largely superseded by the Input_Opt object.

  - Remove all pointer references to fields of State_Met and State_Chm from the parallel DO loop.

  - Now use the proper # of tracers for APM aerosol microphysics.

  - Now declare the parallel DO loop with !$OMP+PRIVATE, which results in better load-balancing.

- DO_MERRA_CONVECTION (in module GeosCore/convection_mod.F):

  - Now make the following variables local pointer variables instead of arguments:

    - AD, BXHEIGHT, CMFMC, DQRCU, DTRAIN, REEVAPCN, T, Q. These point to the corresponding variables in State_Met or State_Chm.

    - H2O2s, SO2s: These point to the corresponding arrays in GeosCore/sulfate_mod.F.

- COMPUTE_F (in module GeosCore/wetscav_mod.F):

  - Now make the F argument of routine COMPUTE_F an assumed-shape array (i.e. F(:,:,:) ). This allows us to pass pointer-valued arguments to the routine.

- GeosCore/gc_type_mod.F:

  - This has now been moved to the obsolete/ subdirectory.

Also note that with this fix, the total CPU time of this run (1-day with GEOS-4x5 met) dropped from 16:11 to 15:23 (min:sec). So this fix makes the overall simulation run a little faster for each simulation day.

--Bob Y. 17:09, 30 May 2014 (EDT)

Removed array temporaries in call to FVDAS_CONVECT

These updates were validated in the 1-month benchmark simulation v10-01c and approved on 29 May 2014.

The GEOS-Chem Unit Tester revealed the presence of array temporaries in the call to GEOS-4 convection routine FVDAS_CONVECT. To remove the array temporaries, we made these modifications in subroutine DO_GEOS4_CONVECT, located in module GeosCore/convection_mod.F:

(1) Declare the F variable with the TARGET attribute, as follows:

#if defined( APM )

INTEGER :: INDEXSOL(Input_Opt%N_TRACERS+N_APMTRA)

REAL*8, TARGET :: F (IIPAR,JJPAR,LLPAR,

& Input_Opt%N_TRACERS+N_APMTRA)

#else

INTEGER :: INDEXSOL(Input_Opt%N_TRACERS)

REAL*8, TARGET :: F (IIPAR,JJPAR,LLPAR,

& Input_Opt%N_TRACERS)

#endif

(2) Add pointer variables p_STT and p_F:

REAL*8, POINTER :: p_STT (:,:,:,:) ! Ptr to STT (flipped in vert)

REAL*8, POINTER :: p_F (:,:,:,:) ! Ptr to F (flipped in vert)

(3) Point p_STT to the State_Chm%Tracers field and flip levels in the vertical. Likewise, point p_F to the F array, and also flip in the vertical:

! Point to various fields and flip in vertical. This avoids

! array temporaries in the call to FVDAS_CONVECT (bmy, 4/14/14)

p_HKETA => State_Met%HKETA (:,:,LLPAR:1:-1 )

... etc ...

p_STT => State_Chm%Tracers(:,:,LLPAR:1:-1,:)

p_F => F (:,:,LLPAR:1:-1,:)

(4) Pass p_STT and p_F to subroutine FVDAS_CONVECT:

! Call the fvDAS convection routines (originally from NCAR!)

CALL FVDAS_CONVECT( TDT,

& N_TOT_TRC,

!----------------------------------------------------------------------------

! Prior to 4/14/14:

! Eliminate an array temporary (bmy, 4/14/14)

! & STT (:,:,LLPAR:1:-1,:),

!----------------------------------------------------------------------------

& p_STT,

& RPDEL,

& p_HKETA,

& p_HKBETA,

& p_ZMMU,

& p_ZMMD,

& p_ZMEU,

& DP,

& NSTEP,

!----------------------------------------------------------------------------

! Prior to 4/14/14:

! Eliminate an array temporary (bmy, 4/14/14)

! & F (:,:,LLPAR:1:-1,:),

!----------------------------------------------------------------------------

& p_F,

& TCVV,

& INDEXSOL,

& State_Met )

(5) Nullify the pointers p_STT and p_F at the end of subroutine DO_GEOS4_CONVECT:

! Free pointers

NULLIFY( STT, p_STT, p_F )
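The technique in steps (1) through (5) can be tried in isolation. In the following sketch, which uses invented names and a small 3-D array instead of the GEOS-Chem fields, a pointer is associated with a vertically flipped section of a TARGET array and passed to a routine. Giving the routine an assumed-shape dummy argument is an assumption of this sketch; with an explicit-shape dummy, a compiler would generally still need a contiguous copy of the flipped section.

```fortran
program flipped_pointer
  implicit none
  integer, parameter :: fp = kind(1.0d0)
  integer, parameter :: nx = 2, ny = 2, nz = 3
  real(fp), target  :: f(nx, ny, nz)
  real(fp), pointer :: p_f(:,:,:)
  integer :: l

  ! Fill each level with its own index: level 1 = 1.0, level 2 = 2.0, ...
  do l = 1, nz
     f(:,:,l) = real(l, fp)
  end do

  ! Point to F with the vertical dimension reversed
  p_f => f(:,:,nz:1:-1)

  ! Level 1 of the pointer is level NZ of the original array
  print '(a,f4.1)', 'p_f(1,1,1) = ', p_f(1,1,1)

  ! The routine works on the flipped view; changes land in F itself
  call zero_first_level(p_f)
  print '(a,f4.1)', 'f(1,1,nz)  = ', f(1,1,nz)

  ! Free the pointer
  nullify(p_f)

contains

  subroutine zero_first_level(a)
    real(fp), intent(inout) :: a(:,:,:)   ! assumed-shape dummy
    a(:,:,1) = 0.0_fp
  end subroutine zero_first_level

end program flipped_pointer
```

The pointer carries the stride information of the reversed section, so the called routine indexes the original storage from top to bottom without the caller having to build a reversed copy at the call site.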

--Bob Y. 17:08, 30 May 2014 (EDT)

Fixed minor issues in MERRA cloud convection routine

Jenny Fisher discovered two minor issues in the MERRA convection routine DO_MERRA_CONVECTION (in module convection_mod.f):

ND38 diagnostic issue in routine DO_MERRA_CONVECTION

This update was tested in the 1-month benchmark simulation v9-01-02j and approved on 16 Aug 2011.

Jenny Fisher wrote:

- The bug is in routine DO_MERRA_CONVECTION. When DIAG38 is first written (around line 2056 in my code, after the comment titled "(3.4) ND38 Diagnostic"), DIAG38 is formed by adding T0 (converted to appropriate units) to the diagnostic each level K. This is correct. However, the second time we add to DIAG38 (below the cloud base, around line 2245 in my code), we add T0_SUM to each level. From what we can tell, T0_SUM represents not the washout from each level but instead the total wet scavenging loss, summed over the entire column. So we are effectively double counting in these levels and adding way too much. [Helen Amos and I] think this should instead be adding WETLOSS.

The fix is below:

IF ( OPTIONS%USE_DIAG38 .and. F(K,IC) > 0d0 ) THEN

DIAG38(K,IC) = DIAG38(K,IC)

!------------------------------------------------------------------------------

! Prior to 8/16/11:

! Now use WETLOSS instead of T0_SUM in the ND38 diagnostic below the cloud

! base. WETLOSS is the loss in this level, but T0_SUM is the loss summed

! over the entire column. Using T0_SUM leads us to over-count the tracer

! scavenged out of the column. (jaf, hamos, bmy, 8/16/11)

! & + ( T0_SUM * AREA_M2 / TCVV_DNS )

!------------------------------------------------------------------------------

& + ( WETLOSS * AREA_M2 / TCVV_DNS )

ENDIF

Wrong IF tests used in routine DO_MERRA_CONVECTION

This update was tested in the 1-month benchmark simulation v9-01-02j and approved on 16 Aug 2011.

NOTE: In GEOS-Chem v11-01 and higher versions, tagged Hg indices are now stored in the species database.

- Routine DO_MERRA_CONVECTION was using tracer flags IDTHg2 and IDTHgP to determine whether routines ADD_Hg2_WD, ADD_HgP_WD, and ADD_Hg2_SNOWPACK should be called. However, IDTHg2 and IDTHgP aren't ever defined and were set to zero, so these routines were never called even when the correct mercury species were used.

The fix is to replace these two lines of code

IF ( IS_Hg .and. IC == IDT%Hg2 ) THEN

...

IF ( IS_Hg .and. IC == IDT%HgP ) THEN

with these:

IF ( IS_Hg .and. IS_Hg2( IC ) ) THEN

...

IF ( IS_Hg .and. IS_HgP( IC ) ) THEN

--Bob Y. 16:47, 16 August 2011 (EDT)

--Bob Yantosca (talk) 19:12, 6 May 2016 (UTC)