Advection scheme TPCORE

| Line 83: | Line 83: | ||

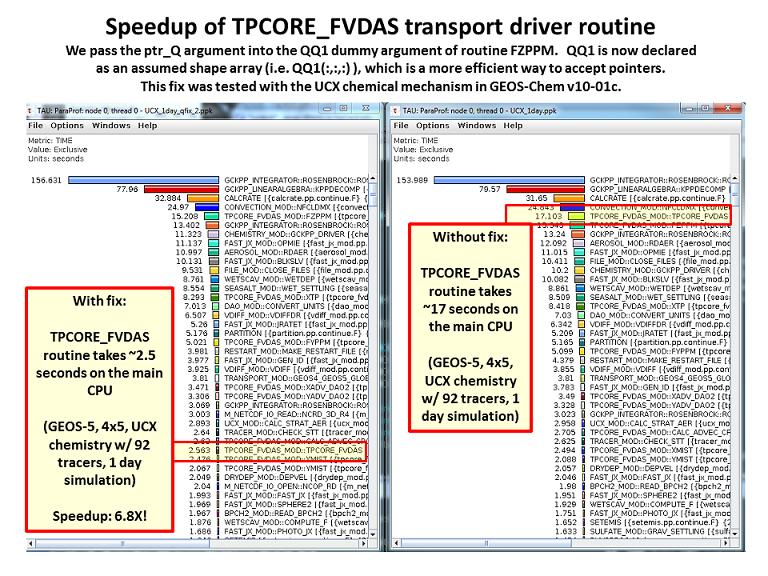

We used the [http://tau.uoregon.edu Tuning and Analysis Utilities (TAU)] to diagnose a performance bottleneck when routine <tt>FZPPM</tt> is called from routine <tt>TPCORE_FVDAS</tt>. Both of these routines are located in module file <tt>GeosCore/tpcore_fvdas_mod.F</tt>. | We used the [http://tau.uoregon.edu Tuning and Analysis Utilities (TAU)] to diagnose a performance bottleneck when routine <tt>FZPPM</tt> is called from routine <tt>TPCORE_FVDAS</tt>. Both of these routines are located in module file <tt>GeosCore/tpcore_fvdas_mod.F</tt>. | ||

Recall that in routine <tt>TPCORE_FVDAS</tt>, we use a pointer to pass a 3-dimensional data slice of the 4-dimensional <tt>Q</tt> array to routine <tt>FZPPM</tt>, as shown here: | Recall that in routine <tt>TPCORE_FVDAS</tt>, we use a pointer variable (<tt>ptr_Q</tt>) to pass a 3-dimensional data slice of the 4-dimensional <tt>Q</tt> array to routine <tt>FZPPM</tt>, as shown here: | ||

! Set up temporary pointer to Q to avoid array temporary in FZPPM | ! Set up temporary pointer to Q to avoid array temporary in FZPPM | ||

Revision as of 21:09, 16 April 2014

On this page we provide information about the TPCORE advection schemes that have been implemented into GEOS-Chem.

Overview

Several different versions of TPCORE are currently implemented in GEOS-Chem:

- TPCORE v7.1

- This version is used with the GEOS-3 met fields. The source code is contained in F90 module tpcore_mod.f.

- GMI TPCORE

- This version is used with the GEOS-4 and GEOS-5 met fields and for GCAP simulations. The source code is contained in the F90 module tpcore_fvdas_mod.f90. This version of TPCORE was taken from the GMI source code.

- TPCORE for GEOS-3 nested grids (1x1)

- This version contains the various modifications for the GEOS-3 nested grids (i.e. how to deal with boundary conditions, etc.). The source code is in F90 module tpcore_window_mod.f. This version was based on TPCORE v7.1 and was modified by Yuxuan Wang.

- TPCORE for GEOS-5 nested grids (0.5x0.666)

- This version contains the various modifications for the GEOS-5 nested grids (i.e. how to deal with boundary conditions, etc.). The source code is in F90 module tpcore_geos5_window_mod.f90. This version was based on a previous implementation of TPCORE (by S-J Lin and Kevin Yeh; this was NOT the GMI implementation), and was modified by Yuxuan Wang and Dan Chen. It shouldn't be used over polar regions.

TPCORE replaced with version based on GMI model

NOTE: The GMI version of TPCORE was implemented into GEOS-Chem v8-01-03.

Dylan Jones and Hongyu Liu independently found that the existing TPCORE transport code used to perform the advection for GEOS-4 and GEOS-5 meteorology (tpcore_fvdas_mod.f90, by S-J Lin and Kevin Yeh) causes overshooting in the polar stratospheric regions. Claire Carouge has supplied a fix for this issue.

Hongyu Liu wrote:

- [Here are] the radon plots for all GEOS series as well as the info on GEOS-Chem version and met field version. This is much less of a problem (or not a problem at all) in GEOS-Chem/GEOS-STRAT and GEOS-Chem/GEOS-3. Actually GEOS-Chem/GEOS-STRAT is very close to GMI/GEOS-STRAT (not shown). Compare the plots below. All these simulations use the same options for tpcore (IORD=3, JORD=3, KORD=7).

- It seems that GMI does not have this problem. You might want to compare the plots on Pages 7 & 10 of this PDF file.

Dylan Jones wrote:

- Attached are the PowerPoint slides. We added slide 9 which compares the mixed layer depth for GEOS-Chem (with GEOS-5) and GMI (with GEOS-4); we see the same problem in GEOS-Chem with GEOS-5, but we do not see it in GMI.

- All the GEOS-4 GEOS-Chem runs were with version v7-02-04. The GEOS-5 GEOS-Chem runs were with v8-01-01.

Claire Carouge replied:

- I think I finally got tpcore to work. You have some plots attached for Radon. So far, I ran 5 months of simulation and each month looks much better: no polar spike and much much lower stratospheric tracer. The concentration of Radon is never null in the stratosphere, but I don't think it is in GMI either; I think the range on the plot was cut off. So I did the plot with the same cut off. Let me know if you see something strange I haven't seen.

- I don't know for sure what the problem was, but I think it was a problem with the definition of the polar cap. In GEOS-Chem, we only averaged values for cells at the poles on 1 band of latitude. In GMI, they use what they call an enlarged polar cap and thus they average values at the poles on 2 bands of latitude.

- The pressure fixer was taken from GMI and thus was written for an enlarged polar cap. The parts of the code that were explicitly labelled for the enlarged polar cap had been removed, but other parts that were not explicitly labelled had not. So we ended up with a hybrid pressure fixer used with a tpcore that did not have an enlarged polar cap. I'm guessing this was creating the polar spikes.

- But, the tpcore in GEOS-Chem and GMI are not easy to compare. I'm not sure there was not a difference in the transport itself and I can't be totally sure the pressure fixer was well introduced in tpcore. I have some doubts about this.

- So as GMI was an example of a working (and clean!) algorithm with the enlarged polar cap, I decided to introduce the enlarged polar cap in GEOS-Chem. For this:

- I slightly modified the pressure-fixer to return to the exact version from GMI

- I changed tpcore to the one from GMI as I was sure the pressure fixer was well introduced in (and the algorithm is cleaner).

Dylan Jones wrote:

- Claire,

- Attached are some comparisons of the CO/O3 correlations in the UTLS with the new tpcore.

- The files called *.gmi_geos.png are with the old version of tpcore and those called *.gmi_geos4_tpcore are with the corrected tpcore. As you can see, there is much less scatter in the correlations, which suggests less anomalous mixing with the new code. The scatter is more similar to what we see in GMI. There is still an offset in the stratosphere (for high ozone in the plots), but I think this may be due to linoz rather than to the transport scheme. This is a huge improvement for GEOS-Chem. Thanks very much for fixing it.

Hongyu Liu replied:

- Thanks Claire! Well done. It's clear that the polar overshooting problem is now fixed.

Also see this PDF document for more information about the new TPCORE version as installed in GEOS-Chem v8-01-03.
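Claire's description of the polar cap (averaging over 1 latitude band versus 2) can be illustrated with a minimal Fortran sketch. This is not the GMI or GEOS-Chem code: the module polar_cap_sketch, the routine average_polar_cap, the grid orientation (row j = 1 at the south pole), and the use of an area-weighted mean are all assumptions made for illustration. The routine replaces the values in each polar cap with their mean over NCAP latitude bands; NCAP = 1 corresponds to averaging only the band at the pole, and NCAP = 2 to an enlarged polar cap like GMI's.

```fortran
! Hypothetical sketch (not GMI or GEOS-Chem source): replace the values in
! each polar cap with their area-weighted mean over NCAP latitude bands.
module polar_cap_sketch
  implicit none
  integer, parameter :: dp = kind(1.0d0)
contains
  subroutine average_polar_cap(q, area, ncap)
    real(dp), intent(inout) :: q(:,:)   ! q(i,j): tracer; row j = 1 is the south pole (assumed)
    real(dp), intent(in)    :: area(:)  ! area(j): area of one grid box in latitude row j
    integer,  intent(in)    :: ncap     ! latitude rows per cap (1 = pole row only; 2 = enlarged)
    integer  :: im, jm, j
    real(dp) :: total, weight

    im = size(q, 1)
    jm = size(q, 2)   ! assumes ncap <= jm/2 so the two caps do not overlap

    ! South polar cap: rows 1 .. ncap
    total  = 0.0_dp
    weight = 0.0_dp
    do j = 1, ncap
      total  = total  + area(j) * sum(q(:, j))
      weight = weight + area(j) * im
    end do
    q(:, 1:ncap) = total / weight

    ! North polar cap: rows jm-ncap+1 .. jm
    total  = 0.0_dp
    weight = 0.0_dp
    do j = jm - ncap + 1, jm
      total  = total  + area(j) * sum(q(:, j))
      weight = weight + area(j) * im
    end do
    q(:, jm-ncap+1:jm) = total / weight
  end subroutine average_polar_cap
end module polar_cap_sketch
```

For example, if the two southernmost rows have zonal means of 12.5 and 22.5 and their grid boxes have areas in the ratio 1:3, calling average_polar_cap(q, area, 2) sets both rows to 20, while calling it with NCAP = 1 sets only the pole row (to 12.5). Because the two choices give different cap values, a pressure fixer written for one cap definition and an advection core using the other will not agree, which is the kind of mismatch Claire suspected.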

References

- Lin, S.-J., and R. B. Rood, 1996: Multidimensional flux-form semi-Lagrangian transport schemes, Mon. Wea. Rev., 124, 2046-2070.

- Lin, S.-J., W. C. Chao, Y. C. Sud, and G. K. Walker, 1994: A class of the van Leer-type transport schemes and its applications to the moisture transport in a General Circulation Model, Mon. Wea. Rev., 122, 1575-1593.

- Wang, Y. X., M. B. McElroy, D. J. Jacob, and R. M. Yantosca, 2004: A nested grid formulation for chemical transport over Asia: applications to CO, J. Geophys. Res., 109, D22307, doi:10.1029/2004JD005237.

- Wu, S., L. J. Mickley, D. J. Jacob, J. A. Logan, R. M. Yantosca, and D. Rind, 2007: Why are there large differences between models in global budgets of tropospheric ozone?, J. Geophys. Res., 112, D05302, doi:10.1029/2006JD007801.

--Bob Y. 16:03, 19 February 2010 (EST)

Previous issues that are now resolved

Reduce time spent in routine TPCORE_FVDAS

NOTE: This update is being validated with 1-month benchmark simulation GEOS-Chem v10-01c.

We used the Tuning and Analysis Utilities (TAU) to diagnose a performance bottleneck when routine FZPPM is called from routine TPCORE_FVDAS. Both of these routines are located in module file GeosCore/tpcore_fvdas_mod.F.

Recall that in routine TPCORE_FVDAS, we use a pointer variable (ptr_Q) to pass a 3-dimensional data slice of the 4-dimensional Q array to routine FZPPM, as shown here:

    ! Set up temporary pointer to Q to avoid array temporary in FZPPM
    ! (bmy, 6/5/13)
    ptr_Q => q(:,:,:,iq)

    ! ==========
    call Fzppm &
    ! ==========
         (klmt, delp1, wz, dq1, ptr_Q, fz(:,:,:,iq), &
          j1p, 1, jm, 1, im, 1, jm,                  &
          im, km, 1, im, 1, jm, 1, km)

    ! Disassociate the pointer (bmy, 6/5/13)
    NULLIFY( ptr_Q )

However, the dummy argument in routine FZPPM that receives pointer slice ptr_Q had been declared with explicit dimension bounds:

    ! Species concentration [mixing ratio]
    REAL*8, INTENT(IN) :: qq1(ILO:IHI, JULO:JHI, K1:K2)

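Long story short: as described on our Passing array arguments efficiently in GEOS-Chem wiki page, passing a pointer variable (such as ptr_Q) to a subroutine via a dummy argument that has explicit dimension bounds (such as qq1) is VERY INEFFICIENT and can cause a significant slowdown. An explicit-shape dummy argument must occupy contiguous memory, but the compiler generally cannot prove that a pointer's target is contiguous, so it may create an array temporary (a copy of the data) each time the routine is called.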
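The two dummy-argument styles can be contrasted in a small, hypothetical Fortran module. The names dummy_arg_sketch, sum_explicit, sum_assumed, and compare_slice are illustrative only and are not GEOS-Chem routines. compare_slice mirrors the TPCORE_FVDAS pattern shown above: it points ptr_Q at one species slice of a 4-D array and passes it both to an explicit-shape dummy and to an assumed-shape dummy. Both return the same answer; the difference lies in what the compiler may have to do at each call, as the comments describe. Whether a copy is actually made depends on the compiler and on whether the pointer's target is contiguous.

```fortran
! Hypothetical sketch: the two dummy-argument styles discussed above.
module dummy_arg_sketch
  implicit none
  integer, parameter :: dp = kind(1.0d0)
contains

  ! Explicit-shape dummy (the old declaration of qq1 in FZPPM). The dummy
  ! must occupy contiguous memory. Since the compiler generally cannot prove
  ! that a pointer's target is contiguous, it may add a run-time check and,
  ! if needed, copy the data into an array temporary at every call.
  function sum_explicit(qq1, ilo, ihi, julo, jhi, k1, k2) result(s)
    integer,  intent(in) :: ilo, ihi, julo, jhi, k1, k2
    real(dp), intent(in) :: qq1(ilo:ihi, julo:jhi, k1:k2)
    real(dp)             :: s
    s = sum(qq1)
  end function sum_explicit

  ! Assumed-shape dummy (the new declaration of qq1 in FZPPM). An array
  ! descriptor (address, extents, strides) is passed, so the callee
  ! normally works on the pointer's target in place, without a copy.
  function sum_assumed(qq1) result(s)
    real(dp), intent(in) :: qq1(:,:,:)
    real(dp)             :: s
    s = sum(qq1)
  end function sum_assumed

  ! Mirrors the TPCORE_FVDAS pattern: point ptr_Q at species slice IQ of
  ! the 4-D array Q and pass it to both routines.
  subroutine compare_slice(q, iq, s_explicit, s_assumed)
    real(dp), intent(inout), target :: q(:,:,:,:)  ! TARGET so ptr_Q may point into it
    integer,  intent(in)  :: iq
    real(dp), intent(out) :: s_explicit, s_assumed
    real(dp), pointer     :: ptr_Q(:,:,:)

    ptr_Q => q(:,:,:,iq)
    s_explicit = sum_explicit(ptr_Q, 1, size(q, 1), 1, size(q, 2), 1, size(q, 3))
    s_assumed  = sum_assumed(ptr_Q)
    nullify(ptr_Q)   ! disassociates only; ptr_Q never owned any memory
  end subroutine compare_slice

end module dummy_arg_sketch
```

The assumed-shape form also copes with strided targets such as q(1:4:2,:,:,iq) without a temporary, which is why it is the safer choice whenever the actual argument is a pointer.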

To remove this performance bottleneck, we simply had to rewrite the declaration of argument qq1 in the FZPPM routine as follows:

    ! Species concentration [mixing ratio]
    !-----------------------------------------------------------------------------
    ! Prior to 4/16/14:
    ! QQ1 accepts a pointer argument, so it should be declared as assumed-shape,
    ! which uses memory more efficiently (bmy, 4/16/14)
    ! REAL*8, INTENT(IN) :: qq1(ILO:IHI, JULO:JHI, K1:K2)
    !-----------------------------------------------------------------------------
    REAL*8, INTENT(IN) :: qq1(:,:,:)
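One side effect of an assumed-shape declaration is worth keeping in mind: a dummy such as qq1(:,:,:) has lower bounds of 1 inside the routine, whatever the bounds of the actual argument. That makes no difference to indexing when ILO, JULO, and K1 are all 1, but if they were not, the declaration could be written as qq1(ILO:, JULO:, K1:) to keep the original indexing while still being assumed-shape. The hypothetical module below (lower_bound_sketch, with illustrative routine names) shows both forms by reporting LBOUND of the dummy argument.

```fortran
! Hypothetical sketch: lower bounds of assumed-shape dummy arguments.
module lower_bound_sketch
  implicit none
  integer, parameter :: dp = kind(1.0d0)
contains
  ! Plain assumed-shape dummy: lower bounds become 1 in the callee.
  subroutine bounds_default(qq1, lb)
    real(dp), intent(in)  :: qq1(:,:,:)
    integer,  intent(out) :: lb(3)
    lb = lbound(qq1)
  end subroutine bounds_default

  ! Assumed-shape dummy with declared lower bounds: indexing such as
  ! qq1(ILO, JULO, K1) works exactly as in an explicit-shape version.
  subroutine bounds_kept(qq1, ilo, julo, k1, lb)
    integer,  intent(in)  :: ilo, julo, k1
    real(dp), intent(in)  :: qq1(ilo:, julo:, k1:)
    integer,  intent(out) :: lb(3)
    lb = lbound(qq1)
  end subroutine bounds_kept
end module lower_bound_sketch
```

Passing a pointer to an array declared q(0:5, -1:3, 2:4) gives lower bounds (1, 1, 1) in bounds_default and (0, -1, 2) in bounds_kept when those bounds are passed in.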

Making this fix significantly reduces the amount of time spent in routine TPCORE_FVDAS, as shown in the following plot.

--Bob Y. 17:08, 16 April 2014 (EDT)

Now pass arguments to transport routines more efficiently

NOTE: The following modifications were added to GEOS-Chem v9-02k, which was approved on 07 Jun 2013.

In GEOS-Chem v9-02, we have rewritten several subroutine calls in order to pass data to the TPCORE routines more efficiently. Please see these wiki posts for detailed information:

- Passing array arguments efficiently in GEOS-Chem

- Eliminating array temporaries in tpcore_mod.F90

- Eliminating array temporaries in tpcore_geos5_window_mod.F90 (and tpcore_geos57_window_mod.F90)

--Bob Y. 15:28, 10 October 2013 (EDT)